This article is out-of-date! Please look at this article, or articles written after June 2016.

Hey guys,

As I said in my previous post, aside from my main work on a smalltalk research virtual machine, I decided 3 months ago to allocate 1/5th of my time to work on the Cog VM. After a discussion with its core designer and developer, Eliot Miranda, it was decided that I will spend time on Cog’s adaptive recompiler: Sista.

In this post and on all future posts about Sista, I will talk about what I am implementing. Therefore, it may be that I will not talk about the best solution for a specific problem, but I will talk about a solution that works and that is good enough. It may also occur that what I am doing is going in the wrong direction and that part of the project may, one day or the other, fail. However, I’m very confident in the success of the Sista, this is why I start this blog post chronicles.

Plan

- What is an adaptive recompiler ?

- What is the Sista approach ?

- What are the different steps, is there release date ?

What is an adaptive recompiler ?

An adaptive recompiler is a tool that translate on-the-fly at runtime a code snippet into another code snippet that is faster to execute, based on analysis of the environment and the previous executions of the code snippet.

Even if a software application is big, usually at most 10% of its code is frequently used. Therefore, the optimizations, wasting memory in exchange for speed, should focus on this 10%, and not on 100% of the system. Efficient virtual machines feature a hot spot detector, a tool that permits to detect portions of code that are frequently used. After a few iterations, the hot spots are converted from virtual code (such as byte code) to native code, to remove the interpretation overhead and to add inline caches. Then, based on the first executions, efficient virtual machines have an adaptive recompiler, that recompiles these portions of code into a faster code. The adaptive recompiler optimization process is triggered by the JIT on very hot spots. For example, java hot spot or javascript V8 have an adaptive recompiler as part of their JIT infrastructure.

Aside from optimizing methods, an adaptive recompiler should have a deoptimizing infrastructure, to allow the user to debug the code as if the adaptive recompiler was not present and to deoptimize then reoptimize a method if its common execution case has changed (the code was firstly optimized based on its first executions, but if the use case of the first execution become obsolete, you need to recompile again the method in a different way).

To understand well how things work, let’s sum up how a VM usually optimize portions of code:

- step 1 (portions of code rarely used): the code is interpreted

- step 2 (portions of code sometimes used): the code has been compiled on-the-fly to native code and the native code is run

- step 3 (portions of code used often): the code has been recompiled into a better native code with optimizations *fast* to execute based on previous executions common cases

- step 4 (portions of code used almost all the time): the code has been recompiled into a better native code with optimizations *slow* to execute, based on previous executions common cases

The difference between the step 3 and step 4 is that in step 3 you will perform optimizations that are quickly perform, therefore the code is quickly recompiled into something better, whereas in step 4 the generated code will be faster but the recompilation may take a while, so you need to be sure that this hot spot is really really hot.

Lastly, the activation of these optimized methods is quite complex. Let’s say you have the method:

Interpreter>>interpret

[interpreter interpretNextInstruction] repeat

Clearly in this case the block will be executed a lot of time, so we want to optimize it. Now, if we only compile an optimized version of this method and wait for the next activation of the method for the optimized method to be run, the optimization would have been useless, because it is very unlikely that the interpreter will stop interpreting code, therefore it will always use the same unoptimized old method of the first activation. It may also happen that you run a method millions of times to do a specific operation, and then never use it again. Therefore, optimizing a method and then wait for it next execution to run the optimized code is not good enough. The solution for this problem is to edit the stack after recompiling a method into an optimized one, in order to remove unoptimized stack frames/contexts and replace them by an optimized context.

What is the Sista approach ?

With the previous ideas I explained, one can understand that the Sista may greatly improve the speed of Pharo. Of course I’m not going to gain 100x boost, because we are only 2 guys spending part of our time on this project (me with the help of Eliot). But I believe we can get some performance boost.

In the case of Pharo and Cog, the Sista requires 2 tools:

- a hot spot detector

- an optimizer/deoptimizer

Currently, in Cog’s JIT, methods are compiled to native code on-the-fly when they are called if they’re already on the global lookup cache. This process is good enough to detect portions of code needing to be jitted (it detects code executed 2 or 3 times in a certain amount of time). However, this is not good enough for the adaptive recompiler, which needs to know how many times the portion of code was executed, detecting a hot spot for example when a method is executed several thousands times in a certain amount of time. On the other hand, the hot spot detector has to detect methods that are frequently used without reducing too much the overall performance of the system. Eliot Miranda had already a good prototype for this. Basically, the Sista Cogit adds counters in some places in the native code, and when a counter reach a certain limit, it triggers the adaptive recompiler. These counters add a few overhead in the code execution (seemingly 15% overhead), but the adaptive recompiler will allow us to earn much more performance than the few that is lost.

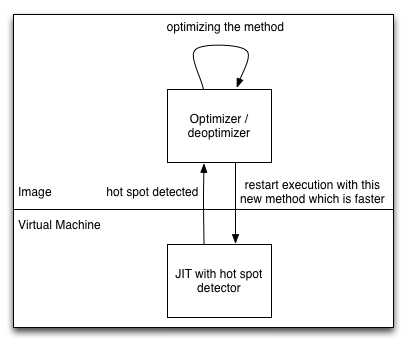

The optimizer/deoptimizer has to be able to recompile methods, optimizing them with type feed-back result from the native code inline caches. It was decided, to make it as simple as possible, to implement this part in the Smalltalk image. Therefore, a virtual machine hook will trigger the in-image optimizer/deoptimizer from the JIT.

In the beginning, the Sista will have only 1 optimization step: the methods will be recompiled on the fly after 30000 executions.

On hot spot detection, the in-image optimizer will analyse the stack, find a good method to optimize, optimize it, edit the stack to put the optimized method instead of the slower method, and restart the execution. The method is optimized based on the inline caches data which provides type-feedback information. The optimized method has access to an extended byte code set with extras byte codes tipping the JIT on how to produce better machine code.

What are the different steps, is there release date ?

Currently a prototype of the hotspot detector is working. I guess Eliot will finish it at some point if I finish something in the image :).

On my side, I am working on the in-image optimizer infrastructure, which I will introduce in a future post (Sista chronicles III). Then I’ll work on a few optimizations (step 1 described later in this post). The next step will be to fix the on-the-fly stack frames replacement (when recompiling a method, you need to edit the current execution state so it uses the optimized method and not the old one) and work on the deoptimization process. Last step is to add more optimizations and to add some kind of dependency management in the image (as you know, in Smalltalk, we always compile method at runtime, therefore we need to unoptimize methods that had inlined the method freshly recompiled).

Here are the optimizations I plan to implement and their effect for Pharo users:

- step 1: basic inlining of quick methods, methods, block. Here users will gain performance from block inlining.

- step 2: basic dead code elimination and copy propagation. Here the user will gain additional performance.

- step 3: new bytecode set, inlining of primitives, addition of unchecked primitive. Here the users will gain both performance and flexibility in the system (for example, this fix will remove the 255 max instance variable limitation and increase the maximum number of literals of a method).

Then, one could look at:

- very hot spot optimizations, such as loop unrolling, instruction scheduling mixed with tail called recursion, …

- polymorphic inline cache optimizations, such as message splitting to improve the quality of inlining and method customization

- improvement of all the algorithms in general, I am implementing all of them in a working but not perfect way, so for example people could improve the SSA / deSSA algorithm.

- Floating-pointer fast arithmetic

- …

Hope you enjoyed this first post 🙂

Note: Not so smart questions

Virtual machine is a very specific domain, requiring very deep knowledge to understand it fully. Some virtual machine optimizations are *not* intuitive at all. For example, a few time ago, I thought that increasing the size of the native code cache of Cog JIT would speed up the system, because native code is faster to execute than interpreted code, so having more would speed more the system. But it is not the case at all, because the cpu instruction cache logic has problem with a big amount of native code. Therefore having only hot spots in native code and the rest of the code interpreted is better in the current Cog VM (growing the cache from 1Mb to 2Mb decrease the performance by around 40%). Due to its specificity, people that does not know a lot about virtual machine ask always the same questions and *cannot easily* understand the answers. To have answers about these kind of questions, please read the virtual machines literature. Therefore I will not validate nor answer questions in comments (in all the Sista chronicles) that does not go into a productive direction, but instead I forward you to the virtual machines literature. For example, I will not allow questions such as: “I don’t believe in adaptive recompilers. Why don’t you do these optimizations in a static compiler with type inference algorithm ?”.

But don’t worry, you can find answers by yourself about that in the virtual machine literature ;-). Look for example at the Self VM papers to understand why adaptive recompilation is so useful.

This is really great to hear that you’re working on Sista. I was beginning to wonder if this was going to happen, ever! I’ve been following Eliot’s ideas for this for a long time. God speed!

Basically I took the existing prototype (I don’t know who exactly implemented it) and I am improving it with the help of Eliot to reach a production-ready state. I believe Eliot has always wanted to work on sista, but there are many things that has to be done before in the customer’s point of view: better memory management, 64 bits, multithreading.

I’m going to visit Eliot in April to work with him so there will be massive progress at this point. I hope the project will succeed. Stay in touch

For the uninitiated, Sista comes from Aosta. Here is a video link where Eliot Miranda presents his ideas.

The video is rich in ideas, but it is old so treat it as a primer. I’m sure that Eliot has refined his thinking since he gave this presentation.

Well what we are doing as a first step is quite similar: inlining blocks and unchecked primitives. Personally I then want to go in the direction of dead code elimination, copy propagation and value numbering because they are easy to implement on the SSA IR, but Eliot wants then to implement fast floating pointers arithmetics. We will see …

Which virtual machine literature do you recommend? 😉 Maybe a post with a list of book names and papers to read for the starter/novice/noob would be great!

(and it would be good to share knowledge with the team 😉

Well I share knowledge I answer all the questions any one ask.

A good introduction to VMs is the virtual machine / bytecode compiler section in the blue book. Then the best book to me is Urs Hozle thesis, he explain some complex ideas but it is written in such a nice way that it is easy to understand.

Blue book:

Click to access Bluebook.pdf

Urs Hozle phd:

http://www.cs.ucsb.edu/~urs/oocsb/self/papers/urs-thesis.html

Pingback: 5000 views ! Thank you ! | Clément Béra

Pingback: The Sista chronicles V: First optimized method running | Clément Béra

How might customization work in this architecture? The cooperation between VM and image seems to rely on a one-to-one mapping between an unoptimized bytecode method and an optimized bytecode method.

This is a very good question. Glad to see people like you interested in the project 🙂

It is difficult to answer for me as right now there’s no customization. But a few weeks ago I had my first optimized method running with this scheme, and I noticed that customization is missing but important (there are quite some cases where the optimizer cannot perform well due to this missing feature).

Image-side, I (will) have some kind of dependency map to know what optimized method to discard when a new method is compiled. The way I think I will implement customization is by adding to this dependency map a link between a method and its virtual copies. A copy will have in the deoptimization metadata a reference toward its original version. Each virtual copy could be optimized separately by the optimizer, but the access to the customization mapping is provided for deoptimization (and maybe for optimizations if needed, I don’t know).

Now as I said I have not implemented it yet so I may be wrong. I may end up with a too large memory footprint too. If you have some idea please discuss them here.

If you maintained specialized versions of methods in the optimizer, they would only be used if they got inlined into an optimized method though, right? They couldn’t be the target of an interpreted send or an unoptimized JITd send because the VM wouldn’t know about them.

It may be that customization isn’t that important for Smalltalk. My intuition is that customization is much more important for its cousins Self and Newspeak, where even instance variables and globals are accessed through sends. Given a constant receiver class, one could inline slot accessors in a single pass and compile them as reads and writes of instance variables and globals–bringing Self and Newspeak to the position where Smalltalk started.

Given the relative simplicity of looking up the self/super/outer sends in a method to check if they are slot accessors, I am imagining this as happening in the non-optimizing JIT, rather than the in-image optimizer, to be profited from when called from interpreted or unoptimized compiled methods as well. But I’m just speculating here 🙂 It might be that it is not worth inlining these accessors before the method trips the counter and goes to the in-image optimizer. It might also be that customization is profitable even for Smalltalk because it reduces polymorphism elsewhere. Or it might just lead to an explosion of code and trash instruction-cache performance. Difficult to say until there are implementations to compare. Anyway, food for thought 🙂

“If you maintained specialized versions of methods in the optimizer, they would only be used if they got inlined into an optimized method though, right?”

Ah ok that was the question I didn’t get it. Well I was thinking of installing these specialized methods in the method dictionary (as for optimized methods) and mark them in a way that the IDE will not display them. So the VM has full access to them. Anyway, as you may have noticed, with this in-image implementation I have to make the IDE a little aware of the optimizations to hide them from the programmer (What if a programmer executes thisContext inspect ? What if he wants to see the byte code of a method in a method browser ?).

As you said it may worth it to customize the methods and inline the accessors in the non optimizing JIT. We discussed about it with Eliot but there’s quite some work to do that (What does happen when you recompile an accessor ? Do I end up with a code blow-up in the native code zone ?) and we’re not sure we will earn much for smalltalk. To keep the VM “simple”, we want to add only optimizations that really worth it. So right now NewSpeak will have to wait for the in-image optimizer to inline slot access.

I thought about customization to reduce polymorphism. Note that I am able to inline a PIC if all the potential receiver classes target the same method. In my experiments there are very few PICs remaining (less that megamorphic inline caches). But right now the JIT have an early promotion feature: if a selector has already a megamorphic inline cache in the machine code zone, then it grows directly from monomorphic inline cache to megamorphic inline cache without using a PIC in between. That may be why I have this kind of result.

By the way are you working on V8 / Dart ? If so, I was wondering if you were planning to do some kind of technical talk in the next months in Europe ?

True, one could do copying down of methods into subclasses’ method dictionaries in the image. Presumably one would not do this for every method given the hundreds of methods on Object, and either set a bit in the header of the methods that are customized or not let the VM rely on it.

Thinking about the methods being in the image makes me notice that a nice property of the optimizer being bytecode-to-bytecode is the optimizations will survive when the machine code version of a method gets flushed and be immediately available next time it gets JIT’d. This is probably good for start-up time.

I do work on Dart, but not on the VM’s backend, so I have only a vague idea of what goes on there. (And I have no plans to travel.) Dart and V8 have it easier than Cog in that they don’t have to deal with methods being redefined or activations being modified, so Strongtalk may be the more relevant model as maintaining de-opt and dependency info efficiently seems like it would be the hardest part of this project.

Yeah one selling point is that the platform independent optimizations are saved when the image is saved and at the next start-up the image is already in a hot state. But this means I need to care not to have too many optimized methods.

I don’t think maintaining dependency infos would be that hard. I can do most of it in Smalltalk and the model explained in Urs’ phd for the Self VM seems relevant to me. Strongtalk is relevant too but the Self documentation is much better. To me the hardest part is to have deoptimization stable with a low memory footprint.

But I’m confused, in the Dart debugger you can redefine methods or edit method activations on-the-fly, can’t you ? I cannot believe that you need to restart the VM in as in the edit-compile-run vintage development process ?

Pingback: Squeak / Pharo VM documentation links | Clément Béra

Pingback: Testing unsafe operations | Clément Béra